The word “experimentation” can sound distant, even intimidating, especially if you don't come from the world of data or product. But you don't need advanced statistics to get started. What you do need is curiosity, intention, and a bit of method.

“What if, instead of showing the button first, we waited until after users explored?”

“What if we reordered the options based on customer type?”

“What if we only messaged people who hadn't interacted in the last 30 days?”

We've all asked questions like these. But often, we make decisions without knowing whether we're actually improving something — or just changing for the sake of it.

On June 17th, at the event "Design + Data: Creating a Culture of Experimentation", we came together to seriously discuss how to stop guessing. We shared experiences with leading voices like Erin Weigel (former Principal Designer at Booking.com and now advisor at AB Smartly), Márcio Martins (CTO at AB Smartly), and Rodrigo Castillo (Applied Scientist at Neuralworks).

And we left with one thing clear:

Experimentation isn't just a technical tool — it's also a mindset.

And most importantly, it's a way to ask better questions and move in the right direction.

But what does it really mean to experiment?

When making data-driven decisions, it's tempting to compare "before and after" metrics — or to look at what happened during the same period last year. This strategy seems intuitive: if things improved after we made a change, it must have worked... right?

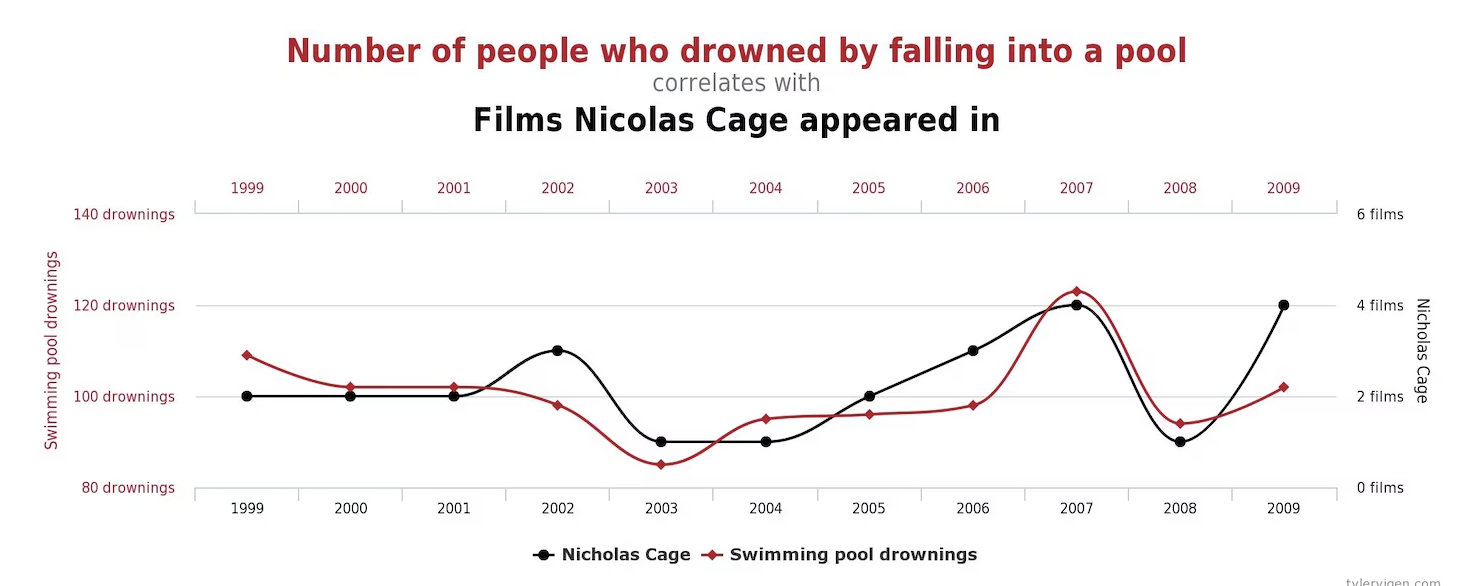

The problem is that these comparisons ignore other variables that could also have influenced the outcome, such as seasonality or a campaign running at the same time. When that happens, the risk of drawing the wrong conclusions (or worse, making bad decisions) goes way up. At the root is a confusion between two fundamental ideas in causal inference: correlation and causation. Two things moving together does not mean one caused the other; correlation does not imply causation.

Figure 1: Overlapping time series of two unrelated variables — the number of people who drowned in swimming pools and the number of films Nicolas Cage appeared in. Taken from Spurious Correlations by Tyler Vigen, the chart illustrates a key point: correlation does not imply causation.
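A minimal sketch of how a before/after comparison can mislead, using made-up numbers: conversion is assumed to rise by 0.2 percentage points per week purely because of seasonality, and a change launched in week 5 has, by construction, no effect at all. All names and values here are hypothetical.

```python
# Hypothetical weekly conversion rates (%) driven only by a seasonal upswing.
# The "change" ships at the start of week 5 and, by construction, has zero effect.
BASELINE = 3.0        # assumed conversion rate in week 1 (%)
TREND_PER_WEEK = 0.2  # assumed seasonal increase per week (percentage points)
LAUNCH_WEEK = 5
TRUE_EFFECT = 0.0     # the real impact of the change

def conversion_rate(week: int) -> float:
    effect = TRUE_EFFECT if week >= LAUNCH_WEEK else 0.0
    return BASELINE + TREND_PER_WEEK * (week - 1) + effect

weeks = range(1, 9)
before = [conversion_rate(w) for w in weeks if w < LAUNCH_WEEK]
after = [conversion_rate(w) for w in weeks if w >= LAUNCH_WEEK]

# Naive comparison: average after launch minus average before launch.
naive_lift = sum(after) / len(after) - sum(before) / len(before)
print(f"Naive before/after lift: {naive_lift:.2f} pp (true effect: {TRUE_EFFECT} pp)")
```

The whole "lift" comes from the trend, which is exactly the kind of hidden variable the comparison cannot separate from the change.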

So how can we tell if something we did actually had an impact? This is where causal inference comes in — a rigorous way of answering a key question: Did this change cause the outcome, or was it just coincidence?

Put simply, inferring causation means comparing what happened with what would have happened if we hadn't made that change. Since we can't observe both worlds at the same time, we use strategies to estimate that alternative scenario — the counterfactual.
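In the standard potential-outcomes notation (standard in causal inference, not taken from the talks), each user $i$ has two potential outcomes: $Y_i(1)$ if they get the change and $Y_i(0)$ if they don't. The individual effect is their difference, but only one of the two is ever observed; the other is the counterfactual. Comparing users who got the change ($T=1$) with those who didn't ($T=0$) splits into two parts:

$$
\tau_i = Y_i(1) - Y_i(0)
$$

$$
\underbrace{\mathbb{E}[Y \mid T=1] - \mathbb{E}[Y \mid T=0]}_{\text{observed difference}}
= \underbrace{\mathbb{E}[Y(1) - Y(0) \mid T=1]}_{\text{effect on those who got the change}}
+ \underbrace{\mathbb{E}[Y(0) \mid T=1] - \mathbb{E}[Y(0) \mid T=0]}_{\text{selection bias}}
$$

The second term is how differently the two groups would have behaved even without the change. Before/after comparisons and hand-picked audiences leave it unknown; random assignment makes it zero in expectation, so the observed difference estimates the effect.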

One of the most robust ways to do this is through experimental design, where we create two comparable groups: one that receives the intervention (the treatment), and one that doesn't (the control). Because the groups are comparable, a difference in outcomes can be attributed to the change rather than to pre-existing differences, and statistical tests tell us whether that difference is larger than chance alone would produce.

In this setup, a sample of users is randomly assigned to two groups: one (Group B) receives the treatment, for example a new feature or message, and the other (Group A) doesn't. Thanks to randomization, systematic differences in outcomes can be causally attributed to the treatment, not to prior differences between the groups.
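A minimal simulation of what randomization buys us, with an invented population and outcome model: a hidden "loyal" trait strongly drives conversion, but because assignment is a coin flip, it ends up roughly equally represented in both groups, and the simple difference in conversion rates lands close to the assumed true effect. The 30% loyalty share, the conversion probabilities and `TRUE_EFFECT` are all hypothetical.

```python
import random

rng = random.Random(42)  # fixed seed so the sketch is reproducible

# Hypothetical population: each user has a hidden trait ("loyal") that raises
# conversion but is never looked at when assigning groups.
N_USERS = 100_000
TRUE_EFFECT = 0.02  # assumed lift from the treatment, in conversion probability

users = [{"loyal": rng.random() < 0.3} for _ in range(N_USERS)]

for user in users:
    # Random assignment: an independent fair coin flip per user.
    user["group"] = "B" if rng.random() < 0.5 else "A"
    # Assumed outcome model: loyalty matters a lot, the treatment adds TRUE_EFFECT.
    p_convert = 0.05 + 0.10 * user["loyal"] + (TRUE_EFFECT if user["group"] == "B" else 0.0)
    user["converted"] = rng.random() < p_convert

def mean_of(field: str, group: str) -> float:
    """Average of a boolean field (share of True) within one group."""
    members = [u for u in users if u["group"] == group]
    return sum(u[field] for u in members) / len(members)

loyal_gap = mean_of("loyal", "B") - mean_of("loyal", "A")
estimated_effect = mean_of("converted", "B") - mean_of("converted", "A")
print(f"Share of loyal users, B minus A: {loyal_gap:+.4f}")
print(f"Estimated effect (difference in means): {estimated_effect:+.4f}")
```

Changing the seed moves both numbers slightly; that leftover noise is what statistical tests are for.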

This gives us a point of comparison that is, on average, free from bias caused by hidden variables. And in a world full of high-stakes decisions, that's no small thing.

Why experiment?

Not experimenting comes at a cost. And we're not just talking about mistakes — we're talking about wasted time, missed opportunities, lost revenue, and frustrated users.

Erin reminded us of an uncomfortable truth:

“Without evidence, many product decisions end up based on the strongest opinion in the room.”

What we aim for with a culture of experimentation is to replace that opinion with something far more valuable: structured curiosity. It's what helps us deliver continuous value in every iteration — and reduce the risk behind each decision. Whether it's nudging a button a few pixels for better visibility or changing the personalization logic that defines what each user sees, small and large choices alike deserve to be tested.

One of the most powerful quotes from the event came from Erin herself:

“We design to learn, not just to validate.” – Erin Weigel

When we make product, design, or business decisions without evidence, we're assuming our intuition is enough. But what if we're wrong? What does a bad decision actually cost?

And that price isn't paid only in outright mistakes. It shows up as lost conversions, frustrated users, and teams pulling in different directions.

As highlighted in an article from Harvard Business Review, many companies spend millions on redesigns, consulting, or marketing campaigns... with no real evidence that those decisions will work. Because when you don't experiment, decisions get made based on intuition, hierarchy, or trend — not data.

“Often, businesspeople think that running experiments is a costly undertaking... but it's prohibitively expensive not to experiment.”

— Neil Bendle, The Costs of Not Experimenting

Intuition can spark great ideas. But when it drives decisions on its own, it can also destroy value. A single untested change, such as a misplaced message or a misprioritized feature, can undo weeks of work. And the worst part? Most of the time, we never find out why. We just conclude: “it didn't work.”

From Simple to Sophisticated: How We Experiment Today

More and more companies are investing in the democratization of experimentation. This means it's no longer just the responsibility of the data or product team: designers, developers, and even commercial teams are starting to get involved. And that's great news.

But not all forms of experimentation are created equal. We can think of this evolution as a path with different levels of maturity:

→ Level 1: Structured Intuition

“A marketing team at a travel company began testing different versions of the banner order on their main site. While there was no randomization, the exercise helped them shift toward a more conscious practice: moving away from team preferences, and starting to iterate based on clear hypotheses.”

In this case, a hypothesis is formulated, simple metrics are defined (like clicks or purchases), and different versions are tested with different audiences. While it's a solid starting point, this approach comes with two major challenges:

- There's usually no random assignment.

- Differences in outcomes are not always statistically validated — which can lead to poor decisions.
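To see why the lack of random assignment matters, here is a sketch with invented numbers loosely inspired by the banner example: returning visitors are assumed to convert at 6% and new visitors at 2%, and the banner order is assumed to have no effect at all. If version B happens to be shown to returning visitors, it looks like a winner; a coin-flip assignment shows no real difference. The segments, rates and traffic mix are hypothetical.

```python
import random

rng = random.Random(7)  # fixed seed so the sketch is reproducible

# Assumed conversion rates: returning visitors convert more, whatever the banner order.
BASE_RATE = {"new": 0.02, "returning": 0.06}
TRUE_BANNER_EFFECT = 0.0  # by construction, version B changes nothing

# Hypothetical traffic: 40% returning visitors, 60% new visitors.
visitors = ["returning" if rng.random() < 0.4 else "new" for _ in range(200_000)]

def converts(segment: str, version: str) -> bool:
    lift = TRUE_BANNER_EFFECT if version == "B" else 0.0
    return rng.random() < BASE_RATE[segment] + lift

def observed_lift(assign) -> float:
    """Conversion rate of B minus A, given a rule that picks a version per visitor."""
    totals = {"A": [0, 0], "B": [0, 0]}  # version -> [conversions, visitors]
    for segment in visitors:
        version = assign(segment)
        totals[version][0] += converts(segment, version)
        totals[version][1] += 1
    rate = {v: conv / n for v, (conv, n) in totals.items()}
    return rate["B"] - rate["A"]

# Level 1 style: version B ends up in front of returning visitors (e.g. a loyalty audience).
by_audience = observed_lift(lambda segment: "B" if segment == "returning" else "A")
# Randomized: a coin flip picks the version, independently of the segment.
randomized = observed_lift(lambda segment: "B" if rng.random() < 0.5 else "A")

print(f"Audience-based 'lift': {by_audience:+.4f}")
print(f"Randomized lift:       {randomized:+.4f}")
```

The audience-based comparison reports roughly the gap between the two segments, not anything the banner did.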

→ Level 2: A/B Tests

“An airline company A/B tested a small variation: showing the benefits of its loyalty program in the purchase confirmation email. While it seemed like a minor tweak, the test revealed a significant increase in registration rates, validating the impact of targeting that channel early in the journey.”

At this stage, we're talking about well-designed experiments:

- Versions are randomly assigned to users.

- Statistical tests are used to determine whether observed differences in the metrics are real or just noise.

This approach gives us greater confidence when estimating impact. If users are randomly split so that one group sees version A and the other sees version B, and group B converts significantly more, we can reasonably attribute that difference to the change itself.
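The statistical test in a simple conversion A/B test can be as small as a two-proportion z-test. The sketch below is one common choice (normal approximation, two-sided test, 95% Wald confidence interval), not necessarily what the teams mentioned here used; the function name is ours and the conversion counts are hypothetical.

```python
from math import erfc, sqrt

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare conversion rates of A and B.

    Returns (z, two-sided p-value, 95% CI for the difference B - A).
    Assumes large samples and that the pooled rate is strictly between 0 and 1.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Pooled rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se_null = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = diff / se_null
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    # Unpooled standard error for the confidence interval of the difference.
    se_diff = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    ci = (diff - 1.96 * se_diff, diff + 1.96 * se_diff)
    return z, p_value, ci

# Hypothetical counts: 400 of 10,000 users converted with A, 460 of 10,000 with B.
z, p_value, ci = two_proportion_z_test(400, 10_000, 460, 10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}, 95% CI for B - A: [{ci[0]:.4f}, {ci[1]:.4f}]")
```

A p-value below the pre-agreed threshold (often 0.05) together with an interval that excludes zero is what turns "B looks better" into "B is very likely better".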

→ Level 3: Machine Learning + Experimentation

In more advanced contexts, machine learning models are used to dynamically personalize the experience. For example:

“A technical team tested a personalization model that recommended different promotions based on passengers' browsing history and preferred routes. When comparing this version to a standard one through a controlled test, they observed clear improvements in engagement and in the intent to purchase additional segments.”

So, what's being tested here? The impact of a personalized system versus a static version. Once again, the A/B test plays a central role — this time comparing an automated model to a fixed baseline.

This evolution reflects not just greater technical sophistication, but also an organizational culture that embraces uncertainty as part of the design process. Experimenting well — with method, ethics, and a user-centered mindset — becomes a shared capability. And that's where real transformation happens.

Of course, this kind of advanced experimentation is not the starting point, nor is it necessary in every case. Integrating personalization models only makes sense when there's already a strong data foundation, enough traffic, and a culture that supports rapid hypothesis testing.

The goal isn't to rush to the most complex setup — it's to build trust and capabilities at every step of the way.

Culture Before Technology

“Concepts don't fail, execution does. That's why we need leadership that creates space to experiment with discipline.” – Márcio Martins, Panel OpenTalks LATAM

Embracing a culture of experimentation is not a technical decision — it's a cultural one. Dashboards, scripts, or platforms aren't enough. What truly makes the difference is how you and your team think, talk, and make decisions around evidence.

A mature experimental organization understands that technology is a means to an end — not the driver. That's why — beyond the stack — what really matters is this:

✓ Cross-functional communication: Designers, data scientists, developers, and business stakeholders should all have space to pose hypotheses, iterate on ideas, and share learnings without fear. Collective exploration is essential.

✓ Aligned objectives: If every team defines success differently, no experiment will survive. Everyone needs to understand what's being tested, why it matters, and how to use the insights to make better decisions.

✓ Strategic collaboration: Experimentation isn't a siloed practice — it's a shared process that connects diverse roles. When design, data, and business work together, decisions stop being opinions and become hypotheses that can be tested and validated.

But putting this into practice isn't always easy.

It's not uncommon for experiments to run into false urgency (“we don't have time to test”), ego (“this is going to work, trust me”), or decisions made before anyone has even looked at the data.

Often, what blocks experimentation isn't a lack of tools — it's the fear of failing in public. The fear of showing that a strongly defended intuition... didn't have the effect we hoped for.

Changing that takes more than platforms or processes. It requires psychologically safe spaces — where questioning isn't seen as resistance, and failure is part of the learning process.

That's where leadership plays a key role.

Because at the end of the day, the true infrastructure of experimentation isn't technical — it's human. And if the culture isn't ready, no A/B test will save your product.

Experimentation as a Pillar of Product Development

At its core, this is about building testing and learning into the development process from the very beginning — not as a final checkpoint, but as a guiding principle that shapes everything from the first hypothesis to the final decision.

This logic unfolds as a four-stage cycle:

- Hypothesis: What do we know? What do we believe will happen? What do we want to learn? What decisions would we make depending on the outcome?

- Experimental design: How will we run the test? Will we randomize by user, session, or geography? What metrics will we track? What sample size do we need?

- Testing: We launch the experiment, monitor its behavior, and make sure the implementation is clean and doesn't contaminate the data.

- Decision and iteration: We analyze the results, make informed decisions, and move on to the next hypothesis. We learn, refine, and repeat.
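Two questions from this cycle have quick back-of-the-envelope answers. The sketch below assumes a conversion metric, a planned 50/50 split, and a two-sided test at α = 0.05 with 80% power (common defaults, not recommendations from the panel). The first function estimates the sample size per group for the experimental design stage; the second is a sample ratio mismatch (SRM) check for the testing stage, a standard way to catch assignment or logging bugs that contaminate the data. Function names and the numbers in the usage lines are hypothetical; `statistics.NormalDist` requires Python 3.8+.

```python
from math import ceil, erfc, sqrt
from statistics import NormalDist

def sample_size_per_group(p_base: float, p_target: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed in EACH group to detect p_base -> p_target
    with a two-sided two-proportion z-test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p_base * (1 - p_base) + p_target * (1 - p_target)
    return ceil((z_alpha + z_power) ** 2 * variance / (p_target - p_base) ** 2)

def srm_p_value(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit test (1 degree of freedom) comparing the observed
    A/B split with the planned one. A tiny p-value suggests broken assignment."""
    total = n_a + n_b
    exp_a = total * expected_share_a
    exp_b = total - exp_a
    chi2 = (n_a - exp_a) ** 2 / exp_a + (n_b - exp_b) ** 2 / exp_b
    return erfc(sqrt(chi2 / 2))  # P(chi-square with 1 df > chi2)

# Hypothetical planning: baseline 4.0% conversion, hoping to detect 4.6%.
print(sample_size_per_group(0.040, 0.046))
# Hypothetical monitoring: the planned 50/50 split came out 50,000 vs 48,500.
print(f"SRM p-value: {srm_p_value(50_000, 48_500):.2e}")
```

With these assumptions, detecting a move from 4.0% to 4.6% needs roughly 18,000 users per group, which is one concrete reason advanced setups only pay off with enough traffic. An SRM p-value this small means the split itself is suspect, so the results should not be trusted until the cause is found.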

As the diagram shows, it's a cyclical, continuous process. The key isn't just running the test — it's what we do with what we learn.

Rodrigo summed it up perfectly during the conversation:

“Experimenting isn't about guessing better. It's about isolating effects, reducing bias, and making decisions with precision.”

Because in the end, experimentation is about taking learning seriously — not to prove we're right, but to discover what works and why, one decision at a time.

Ask Better, Move Smarter

If you're reading this and thinking, “My team's not ready yet,” the truth is, you don't need to be ready. You just need to start.

Experimentation isn't about having the perfect tool. It's about building a habit: asking better questions and letting the evidence answer.

“Conversion-oriented design isn't a trick. It's about having the courage to look at what's really happening.” – Erin Weigel, Designing for Impact

So the next time you think, “We don't have time to experiment,” ask yourself:

Can we really afford to move forward blindly?

Cover: Tangram by Adolfo Álvarez. Two dogs, both made from the same set of shapes.