Everyday Chaos: Why Personal Finance Feels Like a Part-Time Job

Fuel, tolls, parking, rent, groceries, electricity, half a dozen subscriptions: every charge lands on a different day and is split across multiple bank accounts, credit cards and fintech wallets. To the best of my knowledge, no Chilean bank exposes an Open Banking API for natural persons, which means tracking those pesos still requires downloading statements, pasting them into a spreadsheet and hand-tagging each line (often squinting at vague transaction descriptions that are hard to decode once the moment has passed) just to answer: “How much did I spend this week?”

The Open-Banking Gap—and Why It Matters

In other countries, finance apps can plug directly into bank APIs to update your budget in real time (YNAB supports Direct Import in the USA, Canada, and a list of European countries). In Chile, you get work-arounds: Piggi asks you to upload PDF statements; YNAB requires manual CSV imports; most people default to Excel. The result is slow, error-prone, and anything but “real time.” Expense Tracker API exists to eliminate that friction, giving Chileans the automatic transaction feed their banks won’t.

Building a Real-Time Expense Tracker via Email and LLMs

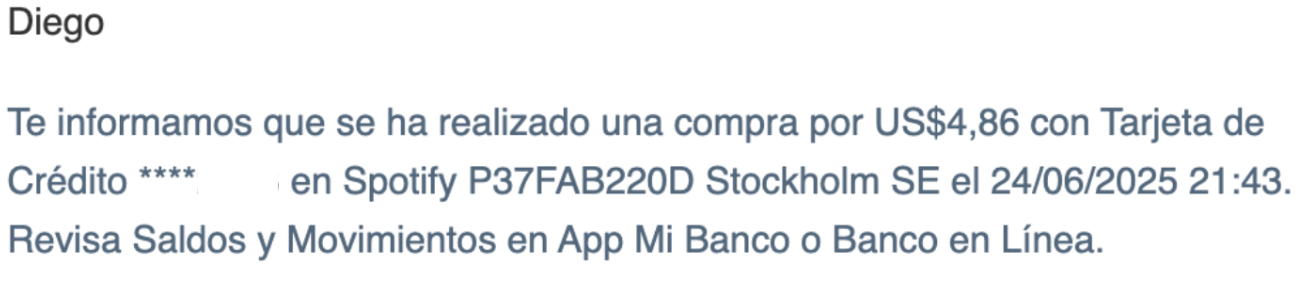

This is where the Expense Tracker API comes in – a cloud-native backend that tackles the problem head-on by leveraging something almost every bank does provide: email notifications. Instead of waiting for open banking, this project turns your inbox into the integration point. Banks in Chile commonly send emails for credit card purchases, account debits, and bill payments. These emails contain all the relevant info – amounts, merchants, dates, maybe the last digits of the card – but in free-form text meant for humans. The key idea of this project was to use an AI language model to read those emails the way a human would, and extract structured data from them automatically.

How does it work? In simple terms: Email comes in → AI parses it → Expense gets logged. The implementation uses AWS cloud services to tie everything together. When a bank sends a transaction alert email, a forwarding rule in my personal Gmail account sends it to an AWS email endpoint (Amazon SES), which publishes the message to an SNS topic. That message invokes a serverless function (AWS Lambda) in real time, passing along the raw email content. Inside the Lambda, the AI does the heavy lifting: the function calls an Anthropic Claude language model via Amazon Bedrock to understand the email and pull out the important fields (the amount spent, the merchant or payee, a short description, and which card or account was used). In essence, the AI reads the email for you and returns a neat JSON object with the transaction details. No manual input, no web scraping, no official API: just your existing email data put to work in a smarter way.
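To make the hand-off concrete, here is a minimal sketch of how the Lambda might unpack what SNS delivers. It assumes the SES receipt rule uses the SNS action with UTF-8 encoding, which places the raw message in a `content` field of the notification. The interfaces are trimmed to the fields read here, and the `extractRawEmails` helper is a hypothetical name, not the project's actual code.

```typescript
// Trimmed-down shapes: only the fields this sketch reads (not the full AWS types).
interface SnsRecord {
  Sns: { Message: string }
}
interface SnsEvent {
  Records: SnsRecord[]
}
interface SesNotification {
  notificationType: string
  mail: { source: string; commonHeaders?: { subject?: string } }
  content?: string // raw email text, assumed UTF-8 encoded
}

interface IncomingEmail {
  from: string
  subject: string
  raw: string
}

// Hypothetical helper: turn each SNS record into the raw email text the model will read.
function extractRawEmails(event: SnsEvent): IncomingEmail[] {
  const emails: IncomingEmail[] = []
  for (const record of event.Records) {
    // SNS delivers the SES notification as a JSON string in `Message`.
    const notification = JSON.parse(record.Sns.Message) as SesNotification
    if (notification.notificationType !== 'Received' || !notification.content) {
      continue // skip anything that is not an inbound email with a body
    }
    emails.push({
      from: notification.mail.source,
      subject: notification.mail.commonHeaders?.subject ?? '',
      raw: notification.content,
    })
  }
  return emails
}
```

Each returned `raw` string is what would be handed to the model in the next step.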

The beauty of using an LLM here is flexibility. Chilean banks produce a variety of email formats and often in Spanish. Writing custom parsers for each type of email is a fragile solution; one format change and your regex breaks. But a well-prompted LLM can handle variability and even interpret context in Spanish naturally. In this project, Claude is given a description of the task (“extract expense info from the email content”) and even a little tool schema defining the expected output structure (amount, merchant, name, card type). The model then responds with a JSON snippet following that schema. For example, if the email says “Compra de $15.000 en SUPERMERCADO XYZ con tu tarjeta Visa...”, the AI can return something like this:

    {
      "amount": 15000,
      "merchant": "Supermercado XYZ",
      "name": "Compra supermercado",
      "card": "credit"
    }

The Lambda function takes that result and stores it, which brings us to the tech stack details.
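Model output is best checked before it is stored. The following is a minimal sketch of such a guard, assuming the four fields shown in the JSON example. The `parseExpense` helper and the accepted card values (`credit`, `debit`) are assumptions for illustration. The peso normalization covers the case where the model returns a string such as "$15.000" instead of a number.

```typescript
type CardType = 'credit' | 'debit' // assumed value set; the example only shows "credit"

interface Expense {
  amount: number // whole Chilean pesos
  merchant: string
  name: string
  card: CardType
}

// Chilean amounts use "." as the thousands separator, so "$15.000" means 15000.
function parseClpAmount(value: unknown): number {
  if (typeof value === 'number' && Number.isInteger(value) && value >= 0) return value
  if (typeof value === 'string') {
    const digits = value.replace(/[$\s.]/g, '')
    if (/^\d+$/.test(digits)) return parseInt(digits, 10)
  }
  throw new Error(`Invalid amount: ${String(value)}`)
}

function requireText(value: unknown, field: string): string {
  if (typeof value !== 'string' || value.trim() === '') {
    throw new Error(`Missing or empty field: ${field}`)
  }
  return value.trim()
}

function parseCard(value: unknown): CardType {
  if (value === 'credit' || value === 'debit') return value
  throw new Error(`Unexpected card type: ${String(value)}`)
}

// Hypothetical guard: accept untyped model output, return a typed Expense or throw.
function parseExpense(input: unknown): Expense {
  if (typeof input !== 'object' || input === null) {
    throw new Error('Model output is not an object')
  }
  const raw = input as Record<string, unknown>
  return {
    amount: parseClpAmount(raw.amount),
    merchant: requireText(raw.merchant, 'merchant'),
    name: requireText(raw.name, 'name'),
    card: parseCard(raw.card),
  }
}
```

Throwing on bad input means a malformed extraction fails the invocation visibly instead of writing a half-empty record.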

Once the AI extracts the expense data, the backend immediately stores the entry. The design follows a serverless architecture on AWS: an email coming in triggers a Lambda function that formats the data and saves it in a database. Each expense record includes fields like amount, merchant, a user-defined name/label, and the card type used. To allow flexible usage, the data is saved in two forms:

- Structured Database – Every expense is saved to a DynamoDB table (a NoSQL, key-value and document-based cloud database) with attributes for year and month, enabling efficient querying by date or building reports. For example, one could query all expenses in January 2025 or filter by merchant, supporting future budgeting and analytics features.

- CSV Archive – Each expense is also appended to a CSV file stored in an S3 bucket, organized by year/month (e.g. a file for 2025/01). This provides a simple way to download or inspect all transactions in a spreadsheet format, ensuring the user always has an easily accessible log of their data.
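As a rough illustration of the two forms, the sketch below shapes one expense for both stores. The attribute names (`id`, `createdAt`), the `2025/01.csv` key layout, and the CSV column order are assumptions; the post only states that records carry a year, a month, and a unique ID, and that CSV files are organized by year/month.

```typescript
interface Expense {
  amount: number
  merchant: string
  name: string
  card: string
}

// Assumed DynamoDB item layout; attribute names are illustrative.
interface ExpenseItem extends Expense {
  id: string
  year: number
  month: number
  createdAt: string // ISO 8601 timestamp
}

function toExpenseItem(expense: Expense, id: string, when: Date): ExpenseItem {
  return {
    ...expense,
    id,
    year: when.getUTCFullYear(),
    month: when.getUTCMonth() + 1, // getUTCMonth() is zero-based
    createdAt: when.toISOString(),
  }
}

// One CSV object per month, e.g. "2025/01.csv" (hypothetical key layout).
function csvKeyFor(item: ExpenseItem): string {
  const month = item.month < 10 ? `0${item.month}` : String(item.month)
  return `${item.year}/${month}.csv`
}

// Quote a field when it contains a comma, quote, or line break (RFC 4180 style).
function csvField(value: string | number): string {
  const text = String(value)
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text
}

function toCsvRow(item: ExpenseItem): string {
  return [item.createdAt, item.amount, item.merchant, item.name, item.card]
    .map(csvField)
    .join(',')
}
```

The sketch buckets by UTC to stay deterministic; a real deployment would probably bucket by Chilean local time so late-night purchases land in the right month. Note also that standard S3 objects cannot be appended in place, so "appending" a row in practice means reading the monthly file, adding the line, and writing it back.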

Technical Highlights: SST, AWS Lambda, DynamoDB, S3, and Claude AI

From my perspective as a developer, this project was a great opportunity to explore new tools in a practical way. It was my first time using Serverless Stack (SST) to deploy infrastructure-as-code, and also the first time working with Amazon Bedrock's LLMs, using a simple tool definition to extract structured data from emails. Here are some of the key technical aspects and how I implemented them:

- Infrastructure as Code with SST: SST is a framework that streamlines building serverless apps on AWS. With SST, I defined all resources in TypeScript code: the API endpoints, the database, storage, and the email processing pipeline. This made deployment and iteration much smoother for a cloud newbie. The project’s config declares, for example, an S3 bucket, a DynamoDB table, and an SNS topic in just a few lines of code.

Here’s a code snippet showing how to set up the email processing pipeline: it creates an SNS topic, subscribes a Lambda handler that uses Claude via Bedrock to parse transaction emails, and links the necessary S3 bucket and DynamoDB table for storage:

    // infra/emailService.ts
    import { bucket, expensesTable } from './storage'

    // SNS topic that receives the inbound emails forwarded by SES
    export const emailTopic = new sst.aws.SnsTopic('EmailTopic')

    // Lambda subscriber that parses each email with Claude via Bedrock
    emailTopic.subscribe('ProcessEmailSubscription', {
      handler: 'packages/functions/src/emailService.handler',
      permissions: [
        {
          actions: ['bedrock:InvokeModel'],
          resources: [
            // Public model ARN (Claude 3 Sonnet) – no account ID needed
            'arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-3-sonnet-20240229-v1:0',
          ],
        },
      ],
      // Gives the function access to the S3 bucket and the DynamoDB table
      link: [bucket, expensesTable],
    })

Embracing SST for this project meant I could treat infrastructure as part of my application code, which was both a learning curve and a revelation in terms of efficiency.

- Serverless Architecture (AWS Lambda + API Gateway): The app logic runs on AWS Lambda functions, making it cost-efficient and scalable from day one. There are two main Lambda flows: one for a REST API and one for email processing. The REST API (exposed via API Gateway) includes endpoints like POST /expenses for manually adding an expense (useful for any transactions that might not come via email) and GET /health for basic status. The more interesting Lambda is the email processor, which is subscribed to the SNS topic that receives incoming emails. Because it’s serverless, the system can handle a burst of emails or sit idle at essentially no cost when there’s no activity. This cloud-native design, using managed services instead of running servers, means I don’t have to manage uptime or scaling, and I only pay per use.

- Data Storage with DynamoDB and S3: I went with a dual-storage setup: DynamoDB for fast querying and S3 for CSV exports. Each expense is saved in DynamoDB with metadata like year, month, and a unique ID, making it easy to filter and run reports. At the same time, I append every record to a monthly CSV in S3. This gives me flexibility: I can run queries via code or just download a CSV and open it in Excel. Both the table and bucket are defined via SST, so wiring them into the Lambda functions was straightforward. Using DynamoDB also sets the foundation for future features like near real-time notifications, since each new record can trigger downstream processing or alerts (for example through DynamoDB Streams) with minimal latency.

- AI Integration with Amazon Bedrock and Claude: For parsing the raw email content, I used Claude via Amazon Bedrock—my first time working with a Bedrock-hosted LLM. The Lambda sends the raw text and a simple tool definition to extract fields like amount, merchant, and card type. Claude handled Spanish emails and varying formats with no problem. One of my original goals was to also classify each expense (e.g., “food”, “car”, “rent”), and while I didn’t finish that part, this setup lays the foundation to add it with just a prompt change. Bedrock made the AI part easy—I didn’t have to host or scale anything, just pay per request.
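For readers who have not used tool definitions before, here is a minimal sketch of what the request to Claude on Bedrock could look like, using the Anthropic Messages format. The tool name `record_expense`, the descriptions, the prompt wording, and the `max_tokens` value are all assumptions; the project's actual prompt and schema are not shown in this post. The body would be serialized to JSON and sent through Bedrock's InvokeModel operation, which is left out here to keep the example self-contained.

```typescript
// Hypothetical tool definition; field names follow the JSON example earlier in the post.
const recordExpenseTool = {
  name: 'record_expense',
  description: 'Record a single expense extracted from a bank notification email.',
  input_schema: {
    type: 'object',
    properties: {
      amount: { type: 'integer', description: 'Amount in Chilean pesos, no separators' },
      merchant: { type: 'string', description: 'Merchant or payee' },
      name: { type: 'string', description: 'Short human-readable label' },
      card: { type: 'string', enum: ['credit', 'debit'] },
    },
    required: ['amount', 'merchant', 'name', 'card'],
  },
}

// Build an Anthropic Messages request body for a Claude model hosted on Bedrock.
function buildRequestBody(rawEmail: string) {
  return {
    anthropic_version: 'bedrock-2023-05-31',
    max_tokens: 512,
    tools: [recordExpenseTool],
    // Ask the model to answer through the tool instead of free text.
    tool_choice: { type: 'tool', name: recordExpenseTool.name },
    messages: [
      {
        role: 'user',
        content: `Extract the expense from this bank email:\n\n${rawEmail}`,
      },
    ],
  }
}

interface ContentBlock {
  type: string
  name?: string
  input?: unknown
}

// Pull the tool arguments out of the decoded response body.
function extractToolInput(response: { content: ContentBlock[] }): unknown {
  const block = response.content.find(
    (b) => b.type === 'tool_use' && b.name === recordExpenseTool.name,
  )
  if (!block) throw new Error('Model did not call the expected tool')
  return block.input
}
```

Adding the unfinished category classification would then amount to one more property in `input_schema`, for instance an enum of categories, plus a line in the prompt.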

Altogether, this stack gave me just the right balance between flexibility, scalability, and simplicity. I was able to prototype a real-time expense tracking backend in a matter of hours, using modern cloud tools and AI without overengineering. For anyone curious about SST, serverless architecture, or Bedrock-based LLMs, this kind of project is a great entry point—it’s small enough to be approachable, but real enough to be useful.

While this version runs fully on AWS—leveraging Lambda, Bedrock, DynamoDB, and S3—the architecture itself is flexible. At its core, it’s just an event-driven pipeline that ingests unstructured messages, extracts structured data using an LLM, and stores it. That same pattern could be replicated using other cloud providers: Cloud Functions and Firestore on GCP, or Azure Functions with Cosmos DB, depending on your stack and preferences. The tech choices here aren’t prescriptive—they’re just one way to solve the problem quickly.
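The pattern can be written down without naming any cloud service. In this sketch the `Extractor` and `ExpenseStore` interfaces and the `processMessage` function are hypothetical names, not part of the project; they mark the two seams where a provider-specific implementation would plug in.

```typescript
interface Expense {
  amount: number
  merchant: string
  name: string
  card: string
}

// The two seams where a cloud provider plugs in.
interface Extractor {
  extract(rawMessage: string): Promise<Expense> // e.g. an LLM call
}
interface ExpenseStore {
  save(expense: Expense): Promise<void> // e.g. a database or object-storage write
}

// The pipeline itself: unstructured message in, structured record stored.
async function processMessage(
  rawMessage: string,
  extractor: Extractor,
  stores: ExpenseStore[],
): Promise<Expense> {
  const expense = await extractor.extract(rawMessage)
  // Write to every configured store; the call rejects if any write fails.
  await Promise.all(stores.map((store) => store.save(expense)))
  return expense
}

// In-memory stand-ins, useful for local tests before wiring real services.
class FakeExtractor implements Extractor {
  async extract(rawMessage: string): Promise<Expense> {
    return {
      amount: 15000,
      merchant: 'Supermercado XYZ',
      name: rawMessage.slice(0, 20),
      card: 'credit',
    }
  }
}
class MemoryStore implements ExpenseStore {
  readonly saved: Expense[] = []
  async save(expense: Expense): Promise<void> {
    this.saved.push(expense)
  }
}
```

On AWS, the extractor would wrap the Bedrock call and the stores would wrap DynamoDB and S3; on another provider only those implementations change.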

How This Approach Compares to Piggi, YNAB, and Manual Tracking

Most expense tracking tools in Chile fall into two camps. Piggi, for example, helps categorize your spending—but only after you manually download and upload your bank statements. It’s useful, but the process is still manual and happens in batches, not in real time. You have to remember to do it regularly, or your data falls behind.

YNAB (You Need A Budget) works great in countries where it can connect to your bank through services like Plaid. But in Chile, that connection doesn’t exist, so you end up entering every transaction by hand or importing CSVs. Some people like the manual approach for control, but for most, it’s hard to keep up.

This project sits somewhere in between: it offers automated, real-time syncing without needing access to your bank. Just forward your transaction emails, and the system logs them as they happen, with no statements to download and no CSVs to import. And because it’s cloud-based and meant to be self-hosted (I plan to make the repo public once the code is cleaned up), you can run it on your own AWS account with full control over your data.

Conclusion: A Technical Fix for a Personal Pain (Even If It Doesn't Work for Me Yet)

Building this project was both a technical experiment and a personal attempt to solve a real-world problem. It let me dive into SST, AWS Lambda, and Bedrock-hosted LLMs in a focused, applied way, tying together infrastructure, automation, and AI in a compact, useful system. Right now the codebase is still pretty scrappy: messy enough that I’d be a bit embarrassed to make the repo public until I’ve refactored it.

Ironically, my own bank doesn’t send email notifications (I realized this only after I’d finished a working version of it), so I can’t fully use the system I built. But that just makes the next steps more interesting: setting up a dashboard to visualize expenses, adding category classification, and automating Gmail forwarding with tools like Apps Script or Zapier. There’s also room to support other sources like SMS, PDFs, or even scraping mailboxes directly. What started as a weekend build has turned into a foundation for a much larger system—and anyone who faces similar banking limitations can build on it too. Have advice, crazy feature ideas, or war stories to share? I’m all ears—drop me a note and let’s make this better together.